Note

This page was generated from docs/tutorials/09_credit_risk_analysis.ipynb.

Credit Risk Analysis

Introduction

This tutorial shows how quantum algorithms can be used for credit risk analysis. More precisely, it shows how Quantum Amplitude Estimation (QAE) can be used to estimate risk measures with a quadratic speed-up over classical Monte Carlo simulation. The tutorial is based on the following papers:

Quantum Risk Analysis. Stefan Woerner, Daniel J. Egger (2019). [Woerner2019]

Credit Risk Analysis using Quantum Computers. Daniel J. Egger et al. (2019). [Egger2019]

A general introduction to QAE can be found in the following paper:

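The structure of the tutorial is as follows:

Problem Definition

Uncertainty Model

Expected Loss

Cumulative Distribution Function

Value at Risk

Conditional Value at Risk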

[1]:

import numpy as np

import matplotlib.pyplot as plt

from qiskit import QuantumRegister, QuantumCircuit

from qiskit.circuit.library import IntegerComparator

from qiskit_algorithms import IterativeAmplitudeEstimation, EstimationProblem

from qiskit_aer.primitives import Sampler

Problem Definition

In this tutorial we want to analyze the credit risk of a portfolio of \(K\) assets. The default probability of every asset \(k\) follows a Gaussian Conditional Independence model. That is, given a value \(z\) sampled from a latent random variable \(Z\) that follows a standard normal distribution, the default probability of asset \(k\) is given by

\[p_k(z) = F\left( \frac{F^{-1}(p_k^0) - \sqrt{\rho_k}\,z}{\sqrt{1 - \rho_k}} \right),\]

where \(F\) denotes the cumulative distribution function of \(Z\), \(p_k^0\) is the base default probability of asset \(k\) (its default probability averaged over \(Z\)), and \(\rho_k\) is the sensitivity of the default probability of asset \(k\) with respect to \(Z\). Thus, given a concrete realization of \(Z\), the individual default events are assumed to be independent of each other.
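In the Gaussian Conditional Independence model, the conditional default probability is \(p_k(z) = F\big((F^{-1}(p_k^0) - \sqrt{\rho_k}\,z)/\sqrt{1 - \rho_k}\big)\), and it can be evaluated classically. The sketch below assumes Python's standard-library `statistics.NormalDist` for \(F\) and \(F^{-1}\). The helper name `conditional_default_probability` is hypothetical and is not part of Qiskit Finance. The code prints \(p_k(z)\) for a few values of \(z\), then checks numerically that averaging \(p_k(z)\) over a standard normal \(Z\) recovers \(p_k^0\).

```python
from statistics import NormalDist

import numpy as np

STD_NORMAL = NormalDist()  # F = STD_NORMAL.cdf, F^{-1} = STD_NORMAL.inv_cdf


def conditional_default_probability(z, p_zero, rho):
    """Hypothetical helper: p_k(z) = F((F^{-1}(p_k^0) - sqrt(rho_k) z) / sqrt(1 - rho_k))."""
    threshold = (STD_NORMAL.inv_cdf(p_zero) - np.sqrt(rho) * z) / np.sqrt(1.0 - rho)
    return STD_NORMAL.cdf(float(threshold))


# parameters of the tutorial's problem definition
p_zeros = [0.15, 0.25]
rhos = [0.1, 0.05]

# a low z raises the default probability, a high z lowers it
for k, (p0, rho) in enumerate(zip(p_zeros, rhos)):
    print(k, [round(conditional_default_probability(z, p0, rho), 4) for z in (-2.0, 0.0, 2.0)])

# a Riemann sum of p_k(z) * phi(z) over a wide grid approximates the average over Z
z_grid = np.linspace(-10.0, 10.0, 20001)
dz = z_grid[1] - z_grid[0]
averages = [
    sum(conditional_default_probability(z, p0, rho) * STD_NORMAL.pdf(float(z)) * dz for z in z_grid)
    for p0, rho in zip(p_zeros, rhos)
]
print(averages)  # close to p_zeros
```

The formula depends on \(z\) only through \(-\sqrt{\rho_k}\,z\), so setting \(\rho_k = 0\) makes the default of asset \(k\) independent of \(Z\).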

We are interested in analyzing risk measures of the total loss

\[L = \sum_{k=1}^K \lambda_k X_k(Z),\]

where \(\lambda_k\) denotes the loss given default of asset \(k\), and, given \(Z\), \(X_k(Z)\) denotes a Bernoulli variable with success probability \(p_k(Z)\) representing the default event of asset \(k\). More precisely, we are interested in the expected value \(\mathbb{E}[L]\), the Value at Risk (VaR) of \(L\), and the Conditional Value at Risk (CVaR) of \(L\), also called Expected Shortfall. VaR and CVaR are defined as

\[VaR_{\alpha}(L) = \inf \{ x \mid \mathbb{P}[L \leq x] \geq 1 - \alpha \}\]

with confidence level \(\alpha \in [0, 1]\), and

\[CVaR_{\alpha}(L) = \mathbb{E}[ L \mid L > VaR_{\alpha}(L) ].\]

For more details on the considered model, see, e.g., Regulatory Capital Modeling for Credit Risk. Marek Rutkowski, Silvio Tarca.
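To make the definitions concrete, the sketch below computes \(\mathbb{E}[L]\), \(VaR_\alpha(L)\) and \(CVaR_\alpha(L)\) for a discrete loss distribution. Like the exact-solution cell later in this tutorial, it takes the conditional expectation over losses strictly above the VaR. The helper `risk_measures` and the toy probabilities are illustrative assumptions, not tutorial data.

```python
import math

import numpy as np


def risk_measures(losses, pdf, alpha):
    """Hypothetical helper returning (E[L], VaR_alpha(L), CVaR_alpha(L)).

    losses: sorted, distinct loss values; pdf: their probabilities (summing to 1).
    """
    losses = np.asarray(losses, dtype=float)
    pdf = np.asarray(pdf, dtype=float)
    expected_loss = float(np.dot(losses, pdf))

    # VaR: smallest loss x with P[L <= x] >= 1 - alpha (tiny tolerance for rounding in cumsum)
    cdf = np.cumsum(pdf)
    i_var = int(np.argmax(cdf >= 1 - alpha - 1e-12))
    var = float(losses[i_var])

    # CVaR: E[L | L > VaR]; undefined (nan) if no probability mass lies above the VaR
    tail_prob = float(pdf[i_var + 1 :].sum())
    if tail_prob > 0:
        cvar = float(np.dot(losses[i_var + 1 :], pdf[i_var + 1 :]) / tail_prob)
    else:
        cvar = math.nan
    return expected_loss, var, cvar


losses = [0, 1, 2, 3]
pdf = [0.6, 0.3, 0.07, 0.03]  # toy distribution, not from the tutorial
print(risk_measures(losses, pdf, alpha=0.05))  # VaR 2.0, CVaR about 3.0
print(risk_measures(losses, pdf, alpha=0.02))  # VaR 3.0 is the largest loss, so CVaR is nan
```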

The problem is defined by the following parameters:

number of qubits used to represent \(Z\), denoted by \(n_z\)

truncation value for \(Z\), denoted by \(z_{\text{max}}\), i.e., \(Z\) is assumed to take \(2^{n_z}\) equidistant values in \(\{-z_{\text{max}}, ..., +z_{\text{max}}\}\)

the base default probabilities for each asset \(p_k^0 \in (0, 1)\), \(k=1, ..., K\)

sensitivities of the default probabilities with respect to \(Z\), denoted by \(\rho_k \in [0, 1)\)

loss given default for asset \(k\), denoted by \(\lambda_k\)

confidence level for VaR / CVaR \(\alpha \in [0, 1]\).

[2]:

# set problem parameters

n_z = 2

z_max = 2

z_values = np.linspace(-z_max, z_max, 2**n_z)

p_zeros = [0.15, 0.25]

rhos = [0.1, 0.05]

lgd = [1, 2]

K = len(p_zeros)

alpha = 0.05

Uncertainty Model

We now construct a circuit that loads the uncertainty model. We first create a quantum state in a register of \(n_z\) qubits that represents \(Z\) following a standard normal distribution. This state then controls single-qubit Y-rotations on a second register of \(K\) qubits, where a \(|1\rangle\) state of qubit \(k\) represents the default event of asset \(k\). The resulting quantum state can be written as

\[|\Psi\rangle = \sum_{i=0}^{2^{n_z}-1} \sqrt{p_z^i}\,|z_i\rangle \bigotimes_{k=1}^K \left( \sqrt{1 - p_k(z_i)}\,|0\rangle + \sqrt{p_k(z_i)}\,|1\rangle \right),\]

where \(z_i\) denotes the \(i\)-th value of the discretized and truncated \(Z\) and \(p_z^i\) its probability [Egger2019].
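Classically, the squared amplitudes of \(|\Psi\rangle\) form a joint distribution over the grid value \(z_i\) and the default indicators. For the tutorial's small parameters, this distribution can simply be enumerated and then aggregated into the total-loss distribution, as the sketch below does. The sketch makes two assumptions. First, the discretized \(Z\) gives each grid point a probability proportional to the standard normal density, renormalized. Second, \(p_k(z_i)\) is evaluated with the exact formula. The Qiskit Finance circuit may realize the conditional rotations only approximately, so its sampled probabilities need not match these numbers exactly.

```python
from itertools import product
from statistics import NormalDist

import numpy as np

STD_NORMAL = NormalDist()

# tutorial parameters
n_z, z_max = 2, 2
p_zeros, rhos, lgd = [0.15, 0.25], [0.1, 0.05], [1, 2]

# assumption: 2**n_z equidistant grid points weighted by the standard normal pdf, renormalized
z_values = np.linspace(-z_max, z_max, 2**n_z)
p_z = np.array([STD_NORMAL.pdf(float(z)) for z in z_values])
p_z /= p_z.sum()


def p_default(z, p_zero, rho):
    # conditional default probability p_k(z) of the Gaussian conditional independence model
    return STD_NORMAL.cdf(float((STD_NORMAL.inv_cdf(p_zero) - np.sqrt(rho) * z) / np.sqrt(1 - rho)))


# P[z_i, x_1..x_K] = p_z^i * prod_k p_k(z_i)^x_k * (1 - p_k(z_i))^(1 - x_k), aggregated by total loss
loss_pdf = {}
for i, z in enumerate(z_values):
    probs = [p_default(z, p0, rho) for p0, rho in zip(p_zeros, rhos)]
    for defaults in product([0, 1], repeat=len(p_zeros)):
        prob = p_z[i]
        for x_k, p_k in zip(defaults, probs):
            prob *= p_k if x_k else 1 - p_k
        loss = sum(l_k * x_k for l_k, x_k in zip(lgd, defaults))
        loss_pdf[loss] = loss_pdf.get(loss, 0.0) + prob

expected_loss = sum(loss * prob for loss, prob in loss_pdf.items())
print(sorted(loss_pdf.items()))
print(expected_loss)
```

By linearity, the expected loss also equals \(\sum_k \lambda_k \sum_i p_z^i\, p_k(z_i)\), which gives a quick consistency check on the enumeration.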

[3]:

from qiskit_finance.circuit.library import GaussianConditionalIndependenceModel as GCI

u = GCI(n_z, z_max, p_zeros, rhos)

[4]:

u.draw()

[4]:

┌───────┐

q_0: ┤0 ├

│ │

q_1: ┤1 ├

│ P(X) │

q_2: ┤2 ├

│ │

q_3: ┤3 ├

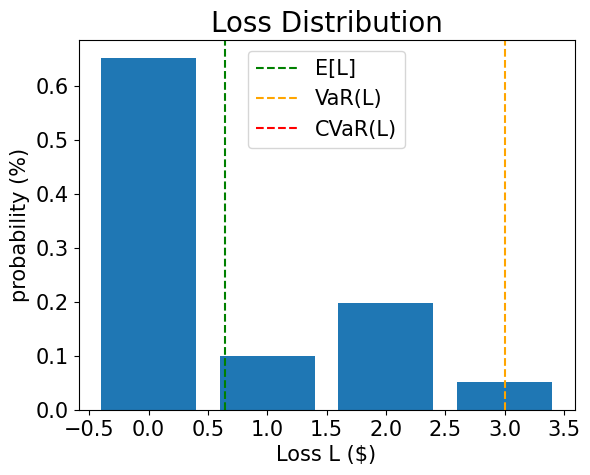

└───────┘We now use the simulator to validate the circuit that constructs \(|\Psi\rangle\) and compute the corresponding exact values for

expected loss \(\mathbb{E}[L]\)

PDF and CDF of \(L\)

value at risk \(VaR(L)\) and corresponding probability

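conditional value at risk \(CVaR(L)\)

These values are computed from the measurement distribution returned by the Aer Sampler. Unless its shots run option is set to None, this distribution is estimated from a finite number of shots, so the "exact" values below carry sampling noise. With run_options={"shots": None}, the Aer Sampler computes the probabilities exactly instead.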

[5]:

u_measure = u.measure_all(inplace=False)

sampler = Sampler()

job = sampler.run(u_measure)

binary_probabilities = job.result().quasi_dists[0].binary_probabilities()

[6]:

# analyze uncertainty circuit and determine exact solutions
p_z = np.zeros(2**n_z)
p_default = np.zeros(K)
values = []
probabilities = []
num_qubits = u.num_qubits
for i, prob in binary_probabilities.items():
    # extract value of Z and corresponding probability
    i_normal = int(i[-n_z:], 2)
    p_z[i_normal] += prob

    # determine overall default probability for k
    loss = 0
    for k in range(K):
        if i[K - k - 1] == "1":
            p_default[k] += prob
            loss += lgd[k]

    values += [loss]
    probabilities += [prob]

values = np.array(values)
probabilities = np.array(probabilities)

expected_loss = np.dot(values, probabilities)
losses = np.sort(np.unique(values))
pdf = np.zeros(len(losses))
for i, v in enumerate(losses):
    pdf[i] += sum(probabilities[values == v])
cdf = np.cumsum(pdf)

i_var = np.argmax(cdf >= 1 - alpha)
exact_var = losses[i_var]
exact_cvar = np.dot(pdf[(i_var + 1) :], losses[(i_var + 1) :]) / sum(pdf[(i_var + 1) :])

/tmp/ipykernel_4478/3771459203.py:36: RuntimeWarning: invalid value encountered in scalar divide

exact_cvar = np.dot(pdf[(i_var + 1) :], losses[(i_var + 1) :]) / sum(pdf[(i_var + 1) :])

[7]:

print("Expected Loss E[L]: %.4f" % expected_loss)

print("Value at Risk VaR[L]: %.4f" % exact_var)

print("P[L <= VaR[L]]: %.4f" % cdf[i_var])

print("Conditional Value at Risk CVaR[L]: %.4f" % exact_cvar)

Expected Loss E[L]: 0.6465

Value at Risk VaR[L]: 3.0000

P[L <= VaR[L]]: 1.0000

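Conditional Value at Risk CVaR[L]: nan

The CVaR is nan because, in this sampled distribution, \(\mathbb{P}[L \leq x]\) reaches \(1 - \alpha\) only at the largest possible loss, \(x = 3\). No probability mass lies above the VaR, so the CVaR formula divides by zero, which also triggers the RuntimeWarning above.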

[8]:

# plot loss PDF, expected loss, var, and cvar

plt.bar(losses, pdf)

plt.axvline(expected_loss, color="green", linestyle="--", label="E[L]")

plt.axvline(exact_var, color="orange", linestyle="--", label="VaR(L)")

plt.axvline(exact_cvar, color="red", linestyle="--", label="CVaR(L)")

plt.legend(fontsize=15)

plt.xlabel("Loss L ($)", size=15)

plt.ylabel("probability (%)", size=15)

plt.title("Loss Distribution", size=20)

plt.xticks(size=15)

plt.yticks(size=15)

plt.show()

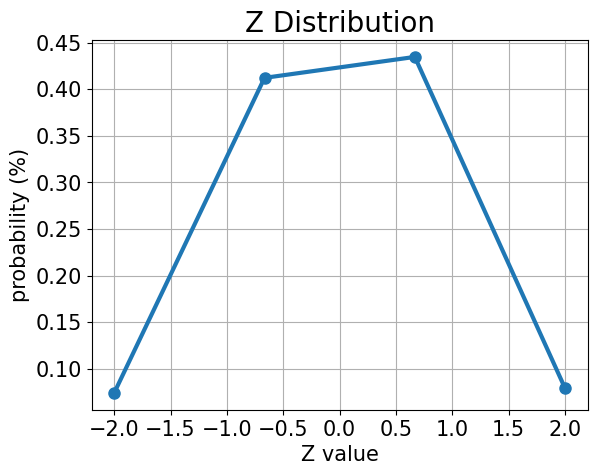

[9]:

# plot results for Z

plt.plot(z_values, p_z, "o-", linewidth=3, markersize=8)

plt.grid()

plt.xlabel("Z value", size=15)

plt.ylabel("probability (%)", size=15)

plt.title("Z Distribution", size=20)

plt.xticks(size=15)

plt.yticks(size=15)

plt.show()

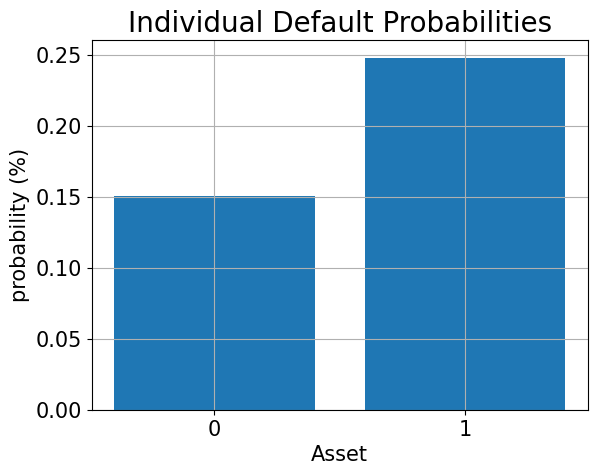

[10]:

# plot results for default probabilities

plt.bar(range(K), p_default)

plt.xlabel("Asset", size=15)

plt.ylabel("probability (%)", size=15)

plt.title("Individual Default Probabilities", size=20)

plt.xticks(range(K), size=15)

plt.yticks(size=15)

plt.grid()

plt.show()

Expected Loss

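To estimate the expected loss, we first apply a weighted sum operator \(\mathcal{S}\) that adds up the individual losses into the total loss:

\[\mathcal{S}: |x_1, ..., x_K \rangle_K |0\rangle_{n_s} \mapsto |x_1, ..., x_K \rangle_K |\lambda_1 x_1 + ... + \lambda_K x_K\rangle_{n_s}.\]

The required number of qubits to represent the result is given by

\[n_s = \lfloor \log_2( \lambda_1 + ... + \lambda_K ) \rfloor + 1.\]

Once we have the total loss distribution in a quantum register, we can use the techniques described in [Woerner2019] to map a total loss \(L \in \{0, ..., 2^{n_s}-1\}\) to the amplitude of an objective qubit by an operator

\[|L\rangle_{n_s}|0\rangle \mapsto |L\rangle_{n_s} \left( \sqrt{1 - \frac{L}{2^{n_s}-1}}\,|0\rangle + \sqrt{\frac{L}{2^{n_s}-1}}\,|1\rangle \right).\]

The probability of measuring \(|1\rangle\) in the objective qubit is then \(\mathbb{E}[L]/(2^{n_s}-1)\), so we can run amplitude estimation to evaluate the expected loss.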
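The bookkeeping behind this step can be checked with a few lines of arithmetic. The sketch below computes \(n_s\) for the tutorial's losses given default. It then verifies that, if each total loss \(L\) sets the probability of measuring \(|1\rangle\) to \(L/(2^{n_s}-1)\), rescaling that probability recovers \(\mathbb{E}[L]\). The toy loss distribution is an illustrative assumption, not tutorial data.

```python
import math

import numpy as np

lgd = [1, 2]  # losses given default from the tutorial

# n_s = floor(log2(lambda_1 + ... + lambda_K)) + 1 qubits hold every possible total loss
n_s = math.floor(math.log2(sum(lgd))) + 1

# toy distribution of the total loss over {0, ..., 2**n_s - 1} (illustrative, not tutorial data)
losses = np.arange(2**n_s)
p_loss = np.array([0.6, 0.3, 0.07, 0.03])

# ideal objective rotation: P[objective = |1> | L] = L / (2**n_s - 1)
prob_one = float(np.dot(p_loss, losses / (2**n_s - 1)))

# undoing the normalization recovers the expected loss
expected_loss = prob_one * (2**n_s - 1)
print(n_s, prob_one, expected_loss)
```

Equivalently, \(n_s\) is the bit length of \(\sum_k \lambda_k\) (`sum(lgd).bit_length()` in Python), which avoids floating-point logarithms.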

[11]:

# add Z qubits with weight/loss 0

from qiskit.circuit.library import WeightedAdder

agg = WeightedAdder(n_z + K, [0] * n_z + lgd)

[12]:

from qiskit.circuit.library import LinearAmplitudeFunction

# define linear objective function

breakpoints = [0]

slopes = [1]

offsets = [0]

f_min = 0

f_max = sum(lgd)

c_approx = 0.25

objective = LinearAmplitudeFunction(

agg.num_sum_qubits,

slope=slopes,

offset=offsets,

# max value that can be reached by the qubit register (will not always be reached)

domain=(0, 2**agg.num_sum_qubits - 1),

image=(f_min, f_max),

rescaling_factor=c_approx,

breakpoints=breakpoints,

)

Create the state preparation circuit:

[13]:

# define the registers for convenience and readability

qr_state = QuantumRegister(u.num_qubits, "state")

qr_sum = QuantumRegister(agg.num_sum_qubits, "sum")

qr_carry = QuantumRegister(agg.num_carry_qubits, "carry")

qr_obj = QuantumRegister(1, "objective")

# define the circuit

state_preparation = QuantumCircuit(qr_state, qr_obj, qr_sum, qr_carry, name="A")

# load the random variable

state_preparation.append(u.to_gate(), qr_state)

# aggregate

state_preparation.append(agg.to_gate(), qr_state[:] + qr_sum[:] + qr_carry[:])

# linear objective function

state_preparation.append(objective.to_gate(), qr_sum[:] + qr_obj[:])

# uncompute aggregation

state_preparation.append(agg.to_gate().inverse(), qr_state[:] + qr_sum[:] + qr_carry[:])

# draw the circuit

state_preparation.draw()

[13]:

           ┌───────┐┌────────┐      ┌───────────┐
  state_0: ┤0      ├┤0       ├──────┤0          ├
           │       ││        │      │           │
  state_1: ┤1      ├┤1       ├──────┤1          ├
           │  P(X) ││        │      │           │
  state_2: ┤2      ├┤2       ├──────┤2          ├
           │       ││        │      │           │
  state_3: ┤3      ├┤3       ├──────┤3          ├
           └───────┘│  adder │┌────┐│  adder_dg │
objective: ─────────┤        ├┤2   ├┤           ├
                    │        ││    ││           │
    sum_0: ─────────┤4       ├┤0 F ├┤4          ├
                    │        ││    ││           │
    sum_1: ─────────┤5       ├┤1   ├┤5          ├
                    │        │└────┘│           │
    carry: ─────────┤6       ├──────┤6          ├
                    └────────┘      └───────────┘

Before we use QAE to estimate the expected loss, we validate the quantum circuit representing the objective function by just simulating it directly and analyzing the probability of the objective qubit being in the \(|1\rangle\) state, i.e., the value QAE will eventually approximate.

[14]:

state_preparation_measure = state_preparation.measure_all(inplace=False)

sampler = Sampler()

job = sampler.run(state_preparation_measure)

binary_probabilities = job.result().quasi_dists[0].binary_probabilities()

[15]:

# evaluate the result

value = 0

for i, prob in binary_probabilities.items():
    if prob > 1e-6 and i[-(len(qr_state) + 1) :][0] == "1":
        value += prob

print("Exact Expected Loss: %.4f" % expected_loss)

print("Exact Operator Value: %.4f" % value)

print("Mapped Operator value: %.4f" % objective.post_processing(value))

Exact Expected Loss: 0.6465

Exact Operator Value: 0.3818

Mapped Operator value: 0.5973
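The mapped value can be reproduced by hand from the operator value. The sketch below rests on two assumptions. First, `LinearAmplitudeFunction` encodes a normalized value \(\tilde f \in [0, 1]\) as the probability \(\sin^2\big(\pi/4 + \tfrac{\pi}{2} c\,(\tilde f - 1/2)\big)\), where \(c\) is the rescaling factor (`c_approx`). Second, `post_processing` inverts the linearization \(\sin^2(\pi/4 + x) \approx 1/2 + x\) and then maps back to the image \([f_{\min}, f_{\max}]\). This form is consistent with the 0.3818 and 0.5973 printed above. The helper names are hypothetical.

```python
import numpy as np


def encoded_probability(f_normalized, c):
    """Hypothetical: probability of |1> for a normalized value in [0, 1] and rescaling factor c."""
    return np.sin(np.pi / 4 + np.pi / 2 * c * (f_normalized - 0.5)) ** 2


def post_processing(p_one, c, f_min, f_max):
    """Hypothetical: invert sin^2(pi/4 + x) ~ 1/2 + x, then map [0, 1] back to [f_min, f_max]."""
    f_normalized = (p_one - 0.5 + np.pi / 4 * c) * 2 / (np.pi * c)
    return f_min + (f_max - f_min) * f_normalized


# operator value 0.3818 with c_approx = 0.25 and image (0, 3), as in the expected-loss cells
print(round(post_processing(0.3818, 0.25, 0.0, 3.0), 3))  # 0.597

# worst-case linearization error over [0, 1] for several rescaling factors
f = np.linspace(0.0, 1.0, 101)
errors = {}
for c in (0.5, 0.25, 0.05):
    errors[c] = float(np.max(np.abs(post_processing(encoded_probability(f, c), c, 0.0, 1.0) - f)))
    print(c, errors[c])
```

Because `post_processing` is affine, applying it to the measured probability gives the same result as averaging it over the loss distribution. The bias of the mapped estimate is therefore bounded by this worst-case error times \(f_{\max} - f_{\min}\). A smaller \(c\) reduces that bias but also narrows the range of probabilities QAE has to resolve. The remaining gap to the expected loss above also reflects the finite number of shots.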

Next we run QAE to estimate the expected loss with a quadratic speed-up over classical Monte Carlo simulation.
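To put the quadratic speed-up into numbers, the sketch below compares the number of Monte Carlo samples with the number of amplitude estimation oracle queries needed for the precision \(\epsilon = 0.01\) used in the next cell. It only tracks the leading scaling, \(O(1/\epsilon^2)\) versus \(O(1/\epsilon)\), and ignores constant and logarithmic factors. The numbers are therefore orders of magnitude, not exact costs.

```python
epsilon = 0.01  # target precision used in the tutorial

# Monte Carlo: estimating a probability p from N samples has standard error
# sqrt(p (1 - p) / N) <= 1 / (2 sqrt(N)), so N ~ 1 / (4 epsilon^2) samples reach epsilon
n_monte_carlo = round(1 / (4 * epsilon**2))

# amplitude estimation: the error scales as O(1/M) in the number M of oracle queries
n_qae_queries = round(1 / epsilon)

print(n_monte_carlo, n_qae_queries)  # 2500 100
```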

[16]:

# set target precision and confidence level

epsilon = 0.01

alpha = 0.05

problem = EstimationProblem(

state_preparation=state_preparation,

objective_qubits=[len(qr_state)],

post_processing=objective.post_processing,

)

# construct amplitude estimation

ae = IterativeAmplitudeEstimation(

epsilon_target=epsilon, alpha=alpha, sampler=Sampler(run_options={"shots": 100, "seed": 75})

)

result = ae.estimate(problem)

# print results

conf_int = np.array(result.confidence_interval_processed)

print("Exact value: \t%.4f" % expected_loss)

print("Estimated value:\t%.4f" % result.estimation_processed)

print("Confidence interval: \t[%.4f, %.4f]" % tuple(conf_int))

Exact value: 0.6465

Estimated value: 0.6863

Confidence interval: [0.6175, 0.7552]

Cumulative Distribution Function

Instead of the expected loss (which could also be estimated efficiently using classical techniques), we now estimate the cumulative distribution function (CDF) of the loss. Classically, this involves either evaluating all possible combinations of defaulting assets or drawing many samples in a Monte Carlo simulation. Algorithms based on QAE have the potential to significantly speed up this analysis in the future.
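For comparison with the classical route, the sketch below estimates \(\mathbb{P}[L \leq x]\) by Monte Carlo sampling from a known loss distribution. It also reports the standard error \(\sqrt{\hat p(1-\hat p)/N}\), which shrinks only as \(1/\sqrt{N}\). The toy distribution and the use of NumPy's `default_rng` are assumptions of this example. For a real portfolio, one would sample \(Z\) and the default events rather than the total loss directly.

```python
import numpy as np

rng = np.random.default_rng(seed=42)

# toy total-loss distribution (illustrative, not tutorial data) and its exact CDF at x = 2
losses = np.array([0, 1, 2, 3])
pdf = np.array([0.6, 0.3, 0.07, 0.03])
x = 2
exact_cdf = pdf[losses <= x].sum()

results = []
for n_samples in (1_000, 100_000):
    samples = rng.choice(losses, size=n_samples, p=pdf)
    estimate = float(np.mean(samples <= x))
    # standard error of a Bernoulli mean: shrinks only like 1 / sqrt(n_samples)
    std_error = float(np.sqrt(estimate * (1 - estimate) / n_samples))
    results.append((n_samples, estimate, std_error))
    print(n_samples, estimate, std_error)
```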

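To estimate the CDF, i.e., the probability \(\mathbb{P}[L \leq x]\), we again apply \(\mathcal{S}\) to compute the total loss and then apply a comparator that, for a given value \(x\), acts as

\[\mathcal{C}: |L\rangle_{n_s}|0\rangle \mapsto \begin{cases} |L\rangle_{n_s}|1\rangle & \text{if } L \leq x, \\ |L\rangle_{n_s}|0\rangle & \text{if } L > x. \end{cases}\]

The resulting quantum state can be written as

\[\sum_{L = 0}^{x} \sqrt{p_{L}}\,|L\rangle_{n_s}|1\rangle + \sum_{L = x+1}^{2^{n_s}-1} \sqrt{p_{L}}\,|L\rangle_{n_s}|0\rangle,\]

where, for brevity, we write the state directly in terms of the total loss values \(L\) and their probabilities \(p_L\) instead of presenting the details of the uncertainty model.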

The CDF(\(x\)) equals the probability of measuring \(|1\rangle\) in the objective qubit and QAE can be directly used to estimate it.
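The comparator's role can be emulated on classical integers. In the next cell, `IntegerComparator` is constructed with value `x_eval + 1` and `geq=False`. It therefore flags the sum register when \(L < x + 1\), i.e., \(L \leq x\), and the total probability of the flagged states is CDF(\(x\)). The sketch below enumerates all basis states of an \(n_s\)-qubit sum register. The function `comparator_flag` and the toy probabilities are hypothetical.

```python
import numpy as np


def comparator_flag(value, threshold, geq):
    """Hypothetical stand-in: 1 if value >= threshold (geq=True), or value < threshold (geq=False)."""
    return int(value >= threshold) if geq else int(value < threshold)


n_s, x_eval = 2, 2
# same settings as the tutorial's comparator: threshold x_eval + 1, geq=False  =>  flag L <= x_eval
flags = [comparator_flag(L, x_eval + 1, geq=False) for L in range(2**n_s)]
print(flags)  # [1, 1, 1, 0]

# toy total-loss probabilities p_L (illustrative); CDF(x) = probability of the flagged states
p_loss = np.array([0.6, 0.3, 0.07, 0.03])
cdf_x = float(np.dot(p_loss, flags))
print(cdf_x)
```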

[17]:

# set x value to estimate the CDF

x_eval = 2

comparator = IntegerComparator(agg.num_sum_qubits, x_eval + 1, geq=False)

comparator.draw()

[17]:

         ┌──────┐
state_0: ┤0     ├
         │      │
state_1: ┤1     ├
         │  cmp │
compare: ┤2     ├
         │      │
    a15: ┤3     ├
         └──────┘

[18]:

def get_cdf_circuit(x_eval):
    # define the registers for convenience and readability
    qr_state = QuantumRegister(u.num_qubits, "state")
    qr_sum = QuantumRegister(agg.num_sum_qubits, "sum")
    qr_carry = QuantumRegister(agg.num_carry_qubits, "carry")
    qr_obj = QuantumRegister(1, "objective")

    # define the circuit
    state_preparation = QuantumCircuit(qr_state, qr_obj, qr_sum, qr_carry, name="A")

    # load the random variable
    state_preparation.append(u, qr_state)

    # aggregate
    state_preparation.append(agg, qr_state[:] + qr_sum[:] + qr_carry[:])

    # comparator objective function: flags L < x_eval + 1, i.e., L <= x_eval
    comparator = IntegerComparator(agg.num_sum_qubits, x_eval + 1, geq=False)
    state_preparation.append(comparator, qr_sum[:] + qr_obj[:] + qr_carry[:])

    # uncompute aggregation
    state_preparation.append(agg.inverse(), qr_state[:] + qr_sum[:] + qr_carry[:])

    return state_preparation


state_preparation = get_cdf_circuit(x_eval)

Again, we first use quantum simulation to validate the quantum circuit.

[19]:

state_preparation.draw()

[19]:

           ┌───────┐┌────────┐        ┌───────────┐
  state_0: ┤0      ├┤0       ├────────┤0          ├
           │       ││        │        │           │
  state_1: ┤1      ├┤1       ├────────┤1          ├
           │  P(X) ││        │        │           │
  state_2: ┤2      ├┤2       ├────────┤2          ├
           │       ││        │        │           │
  state_3: ┤3      ├┤3       ├────────┤3          ├
           └───────┘│  adder │┌──────┐│  adder_dg │
objective: ─────────┤        ├┤2     ├┤           ├
                    │        ││      ││           │
    sum_0: ─────────┤4       ├┤0     ├┤4          ├
                    │        ││  cmp ││           │
    sum_1: ─────────┤5       ├┤1     ├┤5          ├
                    │        ││      ││           │
    carry: ─────────┤6       ├┤3     ├┤6          ├
                    └────────┘└──────┘└───────────┘

[20]:

state_preparation_measure = state_preparation.measure_all(inplace=False)

sampler = Sampler()

job = sampler.run(state_preparation_measure)

binary_probabilities = job.result().quasi_dists[0].binary_probabilities()

[21]:

# evaluate the result

var_prob = 0

for i, prob in binary_probabilities.items():
    if prob > 1e-6 and i[-(len(qr_state) + 1) :][0] == "1":
        var_prob += prob

print("Operator CDF(%s)" % x_eval + " = %.4f" % var_prob)

print("Exact CDF(%s)" % x_eval + " = %.4f" % cdf[x_eval])

Operator CDF(2) = 0.9707

Exact CDF(2) = 0.9492

Next we run QAE to estimate the CDF for a given \(x\).

[22]:

# set target precision and confidence level

epsilon = 0.01

alpha = 0.05

problem = EstimationProblem(state_preparation=state_preparation, objective_qubits=[len(qr_state)])

# construct amplitude estimation

ae_cdf = IterativeAmplitudeEstimation(

epsilon_target=epsilon, alpha=alpha, sampler=Sampler(run_options={"shots": 100, "seed": 75})

)

result_cdf = ae_cdf.estimate(problem)

# print results

conf_int = np.array(result_cdf.confidence_interval)

print("Exact value: \t%.4f" % cdf[x_eval])

print("Estimated value:\t%.4f" % result_cdf.estimation)

print("Confidence interval: \t[%.4f, %.4f]" % tuple(conf_int))

Exact value: 0.9492

Estimated value: 0.9590

Confidence interval: [0.9577, 0.9603]

Value at Risk

In the following, we combine a bisection search with QAE-based estimates of the CDF to find the value at risk, i.e., the smallest loss level \(x\) with \(\mathbb{P}[L \leq x] \geq 1 - \alpha\).
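Because the CDF is non-decreasing in \(x\), the VaR can be located by bisection over integer loss levels. This needs only about \(\log_2\) of the number of levels in CDF evaluations, and in this tutorial each evaluation is a full QAE run. Below is a minimal classical sketch of that search. The helper name and the toy CDFs are hypothetical.

```python
import numpy as np


def var_by_bisection(cdf, target, low, high):
    """Hypothetical helper: smallest integer level in (low, high] whose CDF value reaches target.

    Assumes cdf is non-decreasing with cdf(low) < target <= cdf(high); cdf(low) is never evaluated.
    """
    num_eval = 0
    while high - low > 1:
        mid = (low + high) // 2
        num_eval += 1
        if cdf(mid) >= target:
            high = mid  # target reached: the answer is at or below mid
        else:
            low = mid  # target not reached: the answer is above mid
    return high, num_eval


# toy CDF over the losses {0, 1, 2, 3} (illustrative values), searched from level -1 as in the tutorial
cdf_values = np.cumsum([0.6, 0.3, 0.07, 0.03])
print(var_by_bisection(lambda x: cdf_values[x], 0.95, -1, 3))  # (2, 2)

# with 1024 levels, about log2(1024) = 10 CDF evaluations suffice
print(var_by_bisection(lambda x: (x + 1) / 1024, 0.5, -1, 1023))
```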

[23]:

def run_ae_for_cdf(x_eval, epsilon=0.01, alpha=0.05):
    # construct amplitude estimation
    state_preparation = get_cdf_circuit(x_eval)
    problem = EstimationProblem(
        state_preparation=state_preparation, objective_qubits=[len(qr_state)]
    )
    ae_var = IterativeAmplitudeEstimation(
        epsilon_target=epsilon, alpha=alpha, sampler=Sampler(run_options={"shots": 100, "seed": 75})
    )
    result_var = ae_var.estimate(problem)
    return result_var.estimation

[24]:

def bisection_search(
    objective, target_value, low_level, high_level, low_value=None, high_value=None
):
    """
    Determines the smallest level such that the objective value is at least the target value
    :param objective: objective function
    :param target_value: target value
    :param low_level: lowest level to be considered
    :param high_level: highest level to be considered
    :param low_value: value of lowest level (will be evaluated if set to None)
    :param high_value: value of highest level (will be evaluated if set to None)
    :return: dictionary with level, value, num_eval
    """

    # check whether low and high values are given and evaluate them otherwise
    print("--------------------------------------------------------------------")
    print("start bisection search for target value %.3f" % target_value)
    print("--------------------------------------------------------------------")
    num_eval = 0
    if low_value is None:
        low_value = objective(low_level)
        num_eval += 1
    if high_value is None:
        high_value = objective(high_level)
        num_eval += 1

    # check if low_value already satisfies the condition
    if low_value > target_value:
        return {
            "level": low_level,
            "value": low_value,
            "num_eval": num_eval,
            "comment": "returned low value",
        }
    elif low_value == target_value:
        return {"level": low_level, "value": low_value, "num_eval": num_eval, "comment": "success"}

    # check if high_value reaches the target
    if high_value < target_value:
        return {
            "level": high_level,
            "value": high_value,
            "num_eval": num_eval,
            "comment": "returned high value",
        }
    elif high_value == target_value:
        return {
            "level": high_level,
            "value": high_value,
            "num_eval": num_eval,
            "comment": "success",
        }

    # perform bisection search until the low and high levels are adjacent
    print("low_level low_value level value high_level high_value")
    print("--------------------------------------------------------------------")
    while high_level - low_level > 1:
        level = int(np.round((high_level + low_level) / 2.0))
        num_eval += 1
        value = objective(level)
        print(
            "%2d %.3f %2d %.3f %2d %.3f"
            % (low_level, low_value, level, value, high_level, high_value)
        )
        if value >= target_value:
            high_level = level
            high_value = value
        else:
            low_level = level
            low_value = value

    # return high value after bisection search
    print("--------------------------------------------------------------------")
    print("finished bisection search")
    print("--------------------------------------------------------------------")
    return {"level": high_level, "value": high_value, "num_eval": num_eval, "comment": "success"}

[25]:

# run bisection search to determine VaR

objective = lambda x: run_ae_for_cdf(x)

bisection_result = bisection_search(

objective, 1 - alpha, min(losses) - 1, max(losses), low_value=0, high_value=1

)

var = bisection_result["level"]

--------------------------------------------------------------------

start bisection search for target value 0.950

--------------------------------------------------------------------

low_level low_value level value high_level high_value

--------------------------------------------------------------------

-1 0.000 1 0.752 3 1.000

1 0.752 2 0.959 3 1.000

--------------------------------------------------------------------

finished bisection search

--------------------------------------------------------------------

[26]:

print("Estimated Value at Risk: %2d" % var)

print("Exact Value at Risk: %2d" % exact_var)

print("Estimated Probability: %.3f" % bisection_result["value"])

print("Exact Probability: %.3f" % cdf[i_var])

Estimated Value at Risk: 2

Exact Value at Risk: 3

Estimated Probability: 0.959

Exact Probability: 1.000

Conditional Value at Risk

Last, we compute the CVaR, i.e., the expected value of the loss conditional on it being larger than the VaR. To do so, we evaluate a piecewise linear objective function \(f(L)\) of the total loss \(L\), given by

\[f(L) = \begin{cases} 0 & \text{if } L \leq VaR, \\ L - VaR & \text{if } L > VaR. \end{cases}\]

To normalize, we divide the resulting expected value by the tail probability \(\mathbb{P}[L > VaR] = 1 - \mathbb{P}[L \leq VaR]\) and add the VaR back, i.e., \(CVaR = VaR + \mathbb{E}[f(L)] / \mathbb{P}[L > VaR]\).
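The normalization uses the identity \(CVaR = VaR + \mathbb{E}[f(L)] / \mathbb{P}[L > VaR]\) with \(f(L) = \max(L - VaR, 0)\). This identity can be checked classically on a toy distribution. The values below are illustrative only.

```python
import numpy as np

# toy loss distribution (illustrative, not tutorial data) and a given VaR level
losses = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
pdf = np.array([0.5, 0.3, 0.1, 0.06, 0.04])
var = 2.0

tail = losses > var
tail_prob = pdf[tail].sum()  # P[L > VaR] = 1 - P[L <= VaR]

# piecewise linear objective: f(L) = 0 for L <= VaR and L - VaR above it
f = np.where(tail, losses - var, 0.0)
cvar_via_objective = var + np.dot(pdf, f) / tail_prob

# direct definition: E[L | L > VaR]
cvar_direct = np.dot(pdf[tail], losses[tail]) / tail_prob
print(cvar_via_objective, cvar_direct)  # both about 3.4
```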

[27]:

# define linear objective

breakpoints = [0, var]

slopes = [0, 1]

offsets = [0, 0] # subtract VaR and add it later to the estimate

f_min = 0

f_max = sum(lgd) - var  # largest possible total loss minus VaR

c_approx = 0.25

cvar_objective = LinearAmplitudeFunction(

agg.num_sum_qubits,

slopes,

offsets,

domain=(0, 2**agg.num_sum_qubits - 1),

image=(f_min, f_max),

rescaling_factor=c_approx,

breakpoints=breakpoints,

)

cvar_objective.draw()

[27]:

        ┌────┐
q159_0: ┤0   ├
        │    │
q159_1: ┤1   ├
        │    │
  q160: ┤2 F ├
        │    │
 a83_0: ┤3   ├
        │    │
 a83_1: ┤4   ├
        └────┘

[28]:

# define the registers for convenience and readability

qr_state = QuantumRegister(u.num_qubits, "state")

qr_sum = QuantumRegister(agg.num_sum_qubits, "sum")

qr_carry = QuantumRegister(agg.num_carry_qubits, "carry")

qr_obj = QuantumRegister(1, "objective")

qr_work = QuantumRegister(cvar_objective.num_ancillas - len(qr_carry), "work")

# define the circuit

state_preparation = QuantumCircuit(qr_state, qr_obj, qr_sum, qr_carry, qr_work, name="A")

# load the random variable

state_preparation.append(u, qr_state)

# aggregate

state_preparation.append(agg, qr_state[:] + qr_sum[:] + qr_carry[:])

# linear objective function

state_preparation.append(cvar_objective, qr_sum[:] + qr_obj[:] + qr_carry[:] + qr_work[:])

# uncompute aggregation

state_preparation.append(agg.inverse(), qr_state[:] + qr_sum[:] + qr_carry[:])

[28]:

<qiskit.circuit.instructionset.InstructionSet at 0x7f109d5ac370>

Again, we first use quantum simulation to validate the quantum circuit.

[29]:

state_preparation_measure = state_preparation.measure_all(inplace=False)

sampler = Sampler()

job = sampler.run(state_preparation_measure)

binary_probabilities = job.result().quasi_dists[0].binary_probabilities()

[30]:

# evaluate the result

value = 0

for i, prob in binary_probabilities.items():
    if prob > 1e-6 and i[-(len(qr_state) + 1)] == "1":
        value += prob

# normalize and add VaR to estimate

value = cvar_objective.post_processing(value)

d = 1.0 - bisection_result["value"]

v = value / d if d != 0 else 0

normalized_value = v + var

print("Estimated CVaR: %.4f" % normalized_value)

print("Exact CVaR: %.4f" % exact_cvar)

Estimated CVaR: 3.7618

Exact CVaR: nan

Next we run QAE to estimate the CVaR.

[31]:

# set target precision and confidence level

epsilon = 0.01

alpha = 0.05

problem = EstimationProblem(

state_preparation=state_preparation,

objective_qubits=[len(qr_state)],

post_processing=cvar_objective.post_processing,

)

# construct amplitude estimation

ae_cvar = IterativeAmplitudeEstimation(

epsilon_target=epsilon, alpha=alpha, sampler=Sampler(run_options={"shots": 100, "seed": 75})

)

result_cvar = ae_cvar.estimate(problem)

[32]:

# print results

d = 1.0 - bisection_result["value"]

v = result_cvar.estimation_processed / d if d != 0 else 0

print("Exact CVaR: \t%.4f" % exact_cvar)

print("Estimated CVaR:\t%.4f" % (v + var))

Exact CVaR: nan

Estimated CVaR: 3.3316

[33]:

import tutorial_magics

%qiskit_version_table

%qiskit_copyright

Version Information

| Software | Version |
|---|---|
| qiskit | 1.0.1 |
| qiskit_aer | 0.13.3 |
| qiskit_finance | 0.4.1 |
| qiskit_algorithms | 0.3.0 |
| System information | |
| Python version | 3.8.18 |
| OS | Linux |
| Thu Feb 29 03:07:31 2024 UTC | |

This code is a part of a Qiskit project

© Copyright IBM 2017, 2024.

This code is licensed under the Apache License, Version 2.0. You may

obtain a copy of this license in the LICENSE.txt file in the root directory

of this source tree or at http://www.apache.org/licenses/LICENSE-2.0.

Any modifications or derivative works of this code must retain this

copyright notice, and modified files need to carry a notice indicating

that they have been altered from the originals.
